This post is the fifth part of the series about Kubernetes for beginners. In the

fourth part, I started to write about the Kubernetes

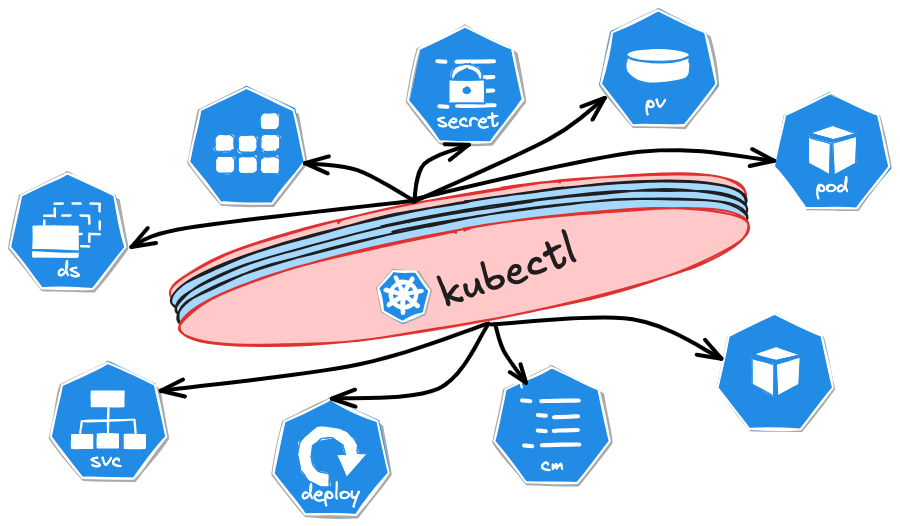

Swiss army knife: kubectl. I covered ways how to find the components you are looking

for. In this episode, I’ll show you how you can gather more information about Kubernetes

components, focusing on status and logs. This can be useful when debugging your

application and trying to figure out what’s going on.

Let’s start…

Last time, I created a Deployment with kubectl using the following command:

❯ kubectl create deployment nginx --image=nginx

I also created a Pod with the following command:

❯ kubectl run nginx --image=nginx

At the end of the previous post, I showed you how to see those resources’ manifests. For example, for the Pod:

❯ kubectl get pod nginx -o yaml

Describing Resources

The most interesting part is the status of the Pod. You can check it by looking at the status field in the previous command’s output:

status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2025-03-03T18:22:32Z"
    status: "True"
    type: PodReadyToStartContainers
  - lastProbeTime: null
    lastTransitionTime: "2025-02-19T21:02:51Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2025-03-03T18:22:32Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2025-03-03T18:22:32Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2025-02-19T21:02:51Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://c4c6f639d1569c84dae2188d3c99cb4164a5bade4d1f402f622edeccb69e2dd6
    image: nginx:latest
    imageID: docker-pullable://nginx@sha256:9d6b58feebd2dbd3c56ab5853333d627cc6e281011cfd6050fa4bcf2072c9496
    lastState:
      terminated:
        containerID: docker://05b8a06f19bcba31e905ebe54ca995e88f9dd43899d7ff2a48c1505fb96663c0
        exitCode: 0
        finishedAt: "2025-02-20T21:51:42Z"
        reason: Completed
        startedAt: "2025-02-20T20:27:29Z"
    name: nginx
    ready: true
    restartCount: 3
    started: true
    state:
      running:
        startedAt: "2025-03-03T18:22:32Z"
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-lwtpw
      readOnly: true
      recursiveReadOnly: Disabled
  hostIP: 192.168.5.15
  hostIPs:
  - ip: 192.168.5.15
  phase: Running
  podIP: 10.42.0.58
  podIPs:
  - ip: 10.42.0.58
  qosClass: BestEffort
  startTime: "2025-02-19T21:02:51Z"

As you can see, it is long and not very human-friendly. There is some interesting stuff in there, but you need more readable output with more details and relevant information. The YAML and JSON formats are suitable when you feed the output to another program. For us humans, it is better to use the describe command:

❯ kubectl describe pod nginx

Name: nginx

Namespace: default

Priority: 0

Service Account: default

Node: lima-rancher-desktop/192.168.5.15

Start Time: Wed, 19 Feb 2025 22:02:51 +0100

Labels: run=nginx

Annotations: <none>

Status: Running

IP: 10.42.0.58

IPs:

IP: 10.42.0.58

Containers:

nginx:

Container ID: docker://c4c6f639d1569c84dae2188d3c99cb4164a5bade4d1f402f622edeccb69e2dd6

Image: nginx

Image ID: docker-pullable://nginx@sha256:9d6b58feebd2dbd3c56ab5853333d627cc6e281011cfd6050fa4bcf2072c9496

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 03 Mar 2025 19:22:32 +0100

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Thu, 20 Feb 2025 21:27:29 +0100

Finished: Thu, 20 Feb 2025 22:51:42 +0100

Ready: True

Restart Count: 3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-lwtpw (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-lwtpw:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SandboxChanged 28m kubelet Pod sandbox changed, it will be killed and re-created.

Normal Pulling 28m kubelet Pulling image "nginx"

Normal Pulled 27m kubelet Successfully pulled image "nginx" in 27.484s (27.484s including waiting). Image size: 191998640 bytes.

Normal Created 27m kubelet Created container nginx

Normal Started 27m kubelet Started container nginx

Most of the time, you’ll be looking into:

- events, so you can see what’s happening with your Pod during scheduling.

- conditions to understand your Pod’s status.

- containers, especially their state and previous state.

Of course, this depends on the issue you are trying to figure out.
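When you only need one of these pieces, you can ask kubectl for it directly instead of scanning the whole describe output. Here is a minimal sketch that wraps two standard kubectl features in small shell functions: -o jsonpath to print a Pod’s conditions, and kubectl get events with a field selector to list the events of a single Pod. The function names pod_conditions and pod_events are my own and not part of kubectl, and both functions work in the current namespace.

```shell
# Print each condition of a Pod as "Type=Status", one per line.
pod_conditions() {
  kubectl get pod "$1" \
    -o jsonpath='{range .status.conditions[*]}{.type}={.status}{"\n"}{end}'
}

# List the events recorded for a single Pod, sorted by their last timestamp.
pod_events() {
  kubectl get events \
    --field-selector "involvedObject.kind=Pod,involvedObject.name=$1" \
    --sort-by=.lastTimestamp
}
```

For the Pod from this post, you would call pod_conditions nginx and pod_events nginx.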

Example: Wrong Image

Let’s create a new Deployment:

❯ kubectl create deployment my-deployment --image=nginx2

When you execute it, you’ll get a message like deployment.apps/my-deployment created. So, all looks good. Let’s check it out:

❯ kubectl get deployments.apps my-deployment

NAME READY UP-TO-DATE AVAILABLE AGE

my-deployment 0/1 1 0 85s

OK, it has not been that long. Maybe it needs more time, right? Wait a bit and try again:

❯ kubectl get deployments.apps my-deployment

NAME READY UP-TO-DATE AVAILABLE AGE

my-deployment 0/1 1 0 2m42s

Ok, now it is time to start panicking… Just joking. In most cases, two minutes should be enough to start a Pod unless something is wrong.

In this case, there is something wrong. Let’s try to figure out why. First, we can describe the Deployment my-deployment:

❯ kubectl describe deployment my-deployment

The output mostly confirms what we already know: the single replica is unavailable. It does not say why. Let’s check the Pods:

❯ kubectl get pods -l app=my-deployment

NAME READY STATUS RESTARTS AGE

my-deployment-cd98b4847-4kct6 0/1 ImagePullBackOff 0 5d16h

That means the image used for my-deployment cannot be pulled. Let’s check

the details of the Pod:

❯ kubectl describe pod my-deployment-cd98b4847-4kct6

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5d16h default-scheduler Successfully assigned default/my-deployment-cd98b4847-4kct6 to lima-rancher-desktop

Normal Pulling 5d16h (x4 over 5d16h) kubelet Pulling image "nginx2"

Warning Failed 5d16h (x4 over 5d16h) kubelet Failed to pull image "nginx2": Error response from daemon: pull access denied for nginx2, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

Warning Failed 5d16h (x4 over 5d16h) kubelet Error: ErrImagePull

Warning Failed 5d16h (x6 over 5d16h) kubelet Error: ImagePullBackOff

Normal BackOff 5d16h (x7 over 5d16h) kubelet Back-off pulling image "nginx2"

Warning FailedMount 17m (x2 over 18m) kubelet MountVolume.SetUp failed for volume "kube-api-access-kklq6" : object "default"/"kube-root-ca.crt" not registered

Normal SandboxChanged 17m kubelet Pod sandbox changed, it will be killed and re-created.

Normal Pulling 16m (x4 over 17m) kubelet Pulling image "nginx2"

Warning Failed 15m (x4 over 17m) kubelet Failed to pull image "nginx2": Error response from daemon: pull access denied for nginx2, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

Warning Failed 15m (x4 over 17m) kubelet Error: ErrImagePull

Warning Failed 15m (x5 over 17m) kubelet Error: ImagePullBackOff

Normal BackOff 2m56s (x57 over 17m) kubelet Back-off pulling image "nginx2"

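Let’s focus just on the events. We see that the Pod fails to pull the image “nginx2” because the repository does not exist or may require ‘docker login’. This is indicated by the warning messages “Failed to pull image” and “Error: ErrImagePull”. Let’s fix it by pointing the container at a valid image. In the command below, the nginx2 to the left of = is the container name, which kubectl create deployment derived from the image name, and nginx:latest is the new image: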

❯ kubectl set image deployment/my-deployment nginx2=nginx:latest

deployment.apps/my-deployment image updated

Check again:

❯ kubectl get deployment my-deployment

NAME READY UP-TO-DATE AVAILABLE AGE

my-deployment 1/1 1 1 5d16h

All good now.
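Instead of re-running kubectl get until the numbers change, you can let kubectl wait for the rollout. Below is a minimal sketch built on kubectl rollout status with a timeout. The function names and the 60s default are my choice. The second function prints the container names of a Deployment, which is the name kubectl set image expects on the left side of =.

```shell
# Block until the Deployment finishes rolling out, or fail after the timeout.
wait_for_rollout() {
  kubectl rollout status "deployment/$1" --timeout="${2:-60s}"
}

# Print the container names defined in a Deployment's Pod template.
deployment_containers() {
  kubectl get deployment "$1" \
    -o jsonpath='{.spec.template.spec.containers[*].name}'
}
```

For example, wait_for_rollout my-deployment 2m waits up to two minutes and returns a non-zero exit code if the rollout has not completed by then, which makes it handy in scripts.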

Example: Pod Crashing

Let’s create a new Deployment. Save the manifest below to a file and apply it:

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: stress
  name: stress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stress
  template:
    metadata:
      labels:
        app: stress
    spec:
      containers:
      - image: polinux/stress
        command:
        - stress
        args: ["--vm", "1", "--vm-bytes", "15M", "--vm-hang", "1"]
        name: stress
        resources:
          limits:
            memory: 20Mi
          requests:
            memory: 10Mi

Wait for the pods to be ready:

❯ kubectl get pods -w

NAME READY STATUS RESTARTS AGE

stress-7d6468d54-b6mhb 1/1 Running 1 (22h ago) 22h

Now let’s set the memory limit to 10Mi:

❯ kubectl set resources deployment stress -c stress --limits=memory=10Mi

deployment.apps/stress resource requirements updated

Check the status:

❯ kubectl get pods -l app=stress --watch

NAME READY STATUS RESTARTS AGE

stress-6c9c8f8d6b-98z7d 0/1 OOMKilled 2 (34s ago) 54s

stress-7d6468d54-b6mhb 1/1 Running 1 (22h ago) 22h

stress-6c9c8f8d6b-98z7d 0/1 CrashLoopBackOff 2 (16s ago) 58s

As you can see, the Pod is crashing due to the memory limit being exceeded. Let’s describe it:

❯ kubectl describe pod stress-6c9c8f8d6b-98z7d

Name: stress-6c9c8f8d6b-98z7d

Namespace: default

Priority: 0

Service Account: default

Node: lima-rancher-desktop/192.168.5.15

Start Time: Mon, 10 Mar 2025 20:10:36 +0100

Labels: app=stress

pod-template-hash=6c9c8f8d6b

Annotations: <none>

Status: Running

IP: 10.42.0.107

IPs:

IP: 10.42.0.107

Controlled By: ReplicaSet/stress-6c9c8f8d6b

Containers:

stress:

Container ID: docker://9b2ecba9c96c87e50229e6780c5817a26d89db095865ad2422a84e8c0a0edaa4

Image: polinux/stress

Image ID: docker-pullable://polinux/stress@sha256:b6144f84f9c15dac80deb48d3a646b55c7043ab1d83ea0a697c09097aaad21aa

Port: <none>

Host Port: <none>

Command:

stress

Args:

--vm

1

--vm-bytes

15M

--vm-hang

1

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: OOMKilled

Exit Code: 1

Started: Mon, 10 Mar 2025 20:12:54 +0100

Finished: Mon, 10 Mar 2025 20:12:54 +0100

Ready: False

Restart Count: 4

Limits:

memory: 10Mi

Requests:

memory: 10Mi

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-58ffj (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-58ffj:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m32s default-scheduler Successfully assigned default/stress-6c9c8f8d6b-98z7d to lima-rancher-desktop

Normal Pulled 2m23s kubelet Successfully pulled image "polinux/stress" in 9.201s (9.201s including waiting). Image size: 9744175 bytes.

Normal Pulled 2m14s kubelet Successfully pulled image "polinux/stress" in 9.232s (9.232s including waiting). Image size: 9744175 bytes.

Normal Pulled 111s kubelet Successfully pulled image "polinux/stress" in 9.254s (9.254s including waiting). Image size: 9744175 bytes.

Normal Created 74s (x4 over 2m23s) kubelet Created container stress

Normal Started 74s (x4 over 2m23s) kubelet Started container stress

Normal Pulled 74s kubelet Successfully pulled image "polinux/stress" in 9.237s (9.237s including waiting). Image size: 9744175 bytes.

Warning BackOff 37s (x8 over 2m13s) kubelet Back-off restarting failed container stress in pod stress-6c9c8f8d6b-98z7d_default(508f4d6e-5a88-4ccc-b584-a8138dfc9da9)

Normal Pulling 24s (x5 over 2m32s) kubelet Pulling image "polinux/stress"

Focus on this section:

...

Containers:

stress:

Container ID: docker://9b2ecba9c96c87e50229e6780c5817a26d89db095865ad2422a84e8c0a0edaa4

Image: polinux/stress

Image ID: docker-pullable://polinux/stress@sha256:b6144f84f9c15dac80deb48d3a646b55c7043ab1d83ea0a697c09097aaad21aa

Port: <none>

Host Port: <none>

Command:

stress

Args:

--vm

1

--vm-bytes

15M

--vm-hang

1

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: OOMKilled

Exit Code: 1

Started: Mon, 10 Mar 2025 20:12:54 +0100

Finished: Mon, 10 Mar 2025 20:12:54 +0100

Ready: False

Restart Count: 4

...

As you can see, the container is crashing due to an Out Of Memory (OOM) error. This is because stress tries to allocate 15M of memory, which is more than the container’s 10Mi memory limit allows, not because the node ran out of memory. To fix this, raise the container’s memory limit above what the process needs, for example back to the original 20Mi:

❯ kubectl set resources deployment stress -c stress --limits=memory=20Mi

deployment.apps/stress resource requirements updated

This was intentionally set to be OOMKilled. But sometimes a container restarts only periodically. You notice sporadic service interruptions, but at first glance, everything is fine. In that case, check whether the Restart Count is greater than 0. If it is, look at the Last State section. There, you can find indications of what is going on.

In this case, I only had one container in the Pod. When a Pod has multiple containers, check the state of each of them.
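To check this for every container of a Pod without reading the full describe output, you can print the relevant status fields with jsonpath. For the log output of the crashed instance, kubectl logs has a --previous flag. The following is only a sketch, and the function names are hypothetical:

```shell
# Print name, restart count, and last termination reason for every container.
# The reason column stays empty for containers that have never terminated.
container_restarts() {
  kubectl get pod "$1" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\t"}{.lastState.terminated.reason}{"\n"}{end}'
}

# Show the logs of the previous (terminated) instance of a container.
previous_logs() {
  kubectl logs "$1" -c "$2" --previous
}
```

For the crashing Pod above, container_restarts stress-6c9c8f8d6b-98z7d should report OOMKilled as the last termination reason, matching the Last State section of the describe output.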

Example: Missing Configuration

Let’s say you have a Deployment like this:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: stress-missing-cm
  name: stress-cm
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stress-missing-cm
  template:
    metadata:
      labels:
        app: stress-missing-cm
    spec:
      containers:
      - image: polinux/stress
        command:
        - stress
        args: ["--vm", "1", "--vm-bytes", "15M", "--vm-hang", "1"]
        name: stress
        resources:
          limits:
            memory: 20Mi
          requests:
            memory: 10Mi
        env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: env-config
              key: log_level

Save it to the file stress-missing-cm.yaml and apply it with kubectl apply -f stress-missing-cm.yaml.

Wait for the Pod to be created, then check the Deployment’s status:

❯ kubectl get deployments stress-cm --watch

NAME READY UP-TO-DATE AVAILABLE AGE

stress-cm 0/1 1 0 3m5s

Ok, let’s check Pods:

❯ kubectl get pods -l app=stress-cm --watch

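No Pods? What is going on? Look at the selector: it uses app=stress-cm, which is the Deployment’s name, while the Pods carry the label app=stress-missing-cm, so nothing matches. Without a Pod name, you cannot run kubectl describe pod yet. When a label query comes back empty like this, you can also find the Pods by following the ownership chain. A Kubernetes Deployment does not manage Pods directly. It manages ReplicaSets, which in turn manage Pods. So, let’s look for the right ReplicaSet and figure out what is happening.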

❯ kubectl get replicasets

NAME DESIRED CURRENT READY AGE

stress-5b9f9dfb49 0 0 0 23m

stress-6c9c8f8d6b 0 0 0 22h

stress-74946f474c 0 0 0 22h

stress-7d6468d54 1 1 1 22h

stress-94756c79f 0 0 0 22h

stress-94b954f85 0 0 0 22h

stress-cm-57d6cdbc9f 1 1 0 6m42s

The one I’m looking for is stress-cm-57d6cdbc9f. Let’s describe it:

❯ kubectl describe replicasets stress-cm-57d6cdbc9f

Name: stress-cm-57d6cdbc9f

Namespace: default

Selector: app=stress-missing-cm,pod-template-hash=57d6cdbc9f

Labels: app=stress-missing-cm

pod-template-hash=57d6cdbc9f

Annotations: deployment.kubernetes.io/desired-replicas: 1

deployment.kubernetes.io/max-replicas: 2

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/stress-cm

Replicas: 1 current / 1 desired

Pods Status: 0 Running / 1 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=stress-missing-cm

pod-template-hash=57d6cdbc9f

Containers:

stress:

Image: polinux/stress

Port: <none>

Host Port: <none>

Command:

stress

Args:

--vm

1

--vm-bytes

15M

--vm-hang

1

Limits:

memory: 20Mi

Requests:

memory: 10Mi

Environment:

LOG_LEVEL: <set to the key 'log_level' of config map 'env-config'> Optional: false

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 10m replicaset-controller Created pod: stress-cm-57d6cdbc9f-qq4pt

OK, the Events section shows that the Pod was created successfully, so it exists even though the label query above did not return it. Let’s list all Pods:

❯ kubectl get pods

NAME READY STATUS RESTARTS AGE

stress-7d6468d54-b6mhb 1/1 Running 1 (22h ago) 22h

stress-cm-57d6cdbc9f-qq4pt 0/1 CreateContainerConfigError 0 17m

So the Pod was created, but there is a configuration error. To find out what exactly is wrong, describe the Pod:

❯ kubectl describe pod stress-cm-57d6cdbc9f-qq4pt

Name: stress-cm-57d6cdbc9f-qq4pt

Namespace: default

Priority: 0

...

Controlled By: ReplicaSet/stress-cm-57d6cdbc9f

Containers:

stress:

...

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 20m default-scheduler Successfully assigned default/stress-cm-57d6cdbc9f-qq4pt to lima-rancher-desktop

Normal Pulled 20m kubelet Successfully pulled image "polinux/stress" in 9.179s (9.179s including waiting). Image size: 9744175 bytes.

Normal Pulled 19m kubelet Successfully pulled image "polinux/stress" in 9.227s (9.227s including waiting). Image size: 9744175 bytes.

Normal Pulled 19m kubelet Successfully pulled image "polinux/stress" in 9.21s (9.21s including waiting). Image size: 9744175 bytes.

Normal Pulled 19m kubelet Successfully pulled image "polinux/stress" in 9.206s (9.207s including waiting). Image size: 9744175 bytes.

Normal Pulled 18m kubelet Successfully pulled image "polinux/stress" in 9.247s (9.247s including waiting). Image size: 9744175 bytes.

Normal Pulled 18m kubelet Successfully pulled image "polinux/stress" in 9.186s (9.186s including waiting). Image size: 9744175 bytes.

Normal Pulled 18m kubelet Successfully pulled image "polinux/stress" in 9.205s (9.205s including waiting). Image size: 9744175 bytes.

Warning Failed 17m (x8 over 20m) kubelet Error: configmap "env-config" not found

Normal Pulled 17m kubelet Successfully pulled image "polinux/stress" in 9.342s (9.342s including waiting). Image size: 9744175 bytes.

Normal Pulled 15m kubelet Successfully pulled image "polinux/stress" in 9.226s (9.226s including waiting). Image size: 9744175 bytes.

Normal Pulling 11s (x56 over 20m) kubelet Pulling image "polinux/stress"

I can see that the image is pulled successfully, but the container cannot be created because the ConfigMap “env-config” is missing.
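Two follow-ups to this example, sketched as shell functions with hypothetical names. The first prints the labels a Deployment selects on, so that a kubectl get pods -l query uses the label the Pods really carry (app=stress-missing-cm in the manifest above, not the Deployment name stress-cm). The second creates a ConfigMap with a single key. The names env-config and log_level come from the manifest, but the value info is only my assumption about what the application would accept.

```shell
# Print the label selector of a Deployment (the labels its Pods must carry).
deployment_selector() {
  kubectl get deployment "$1" -o jsonpath='{.spec.selector.matchLabels}'
}

# Create a ConfigMap with a single key/value pair.
create_literal_configmap() {
  kubectl create configmap "$1" --from-literal="$2=$3"
}
```

Here you would run create_literal_configmap env-config log_level info. The kubelet keeps retrying the container creation, so the Pod should start on its own shortly after the ConfigMap exists.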

Conclusion

As you can see from these three short examples, you can use kubectl describe to get detailed information about the state of your Pods and containers. This helps you troubleshoot issues and understand what is happening. It works the same way for other resources, such as Deployments and ReplicaSets, not only for Pods.

Logs

You can use the kubectl logs command to get logs from a Pod. First, let’s check

all pods running in the cluster:

❯ kubectl get -A pods

NAMESPACE NAME READY STATUS RESTARTS AGE

default stress-7d6468d54-b6mhb 1/1 Running 1 (23h ago) 23h

default stress-cm-57d6cdbc9f-qq4pt 0/1 CreateContainerConfigError 0 46m

kube-system coredns-ccb96694c-4wfcd 1/1 Running 9 (23h ago) 37d

kube-system helm-install-traefik-crd-g5qtm 0/1 Completed 0 37d

kube-system helm-install-traefik-njgh2 0/1 Completed 1 37d

kube-system local-path-provisioner-5cf85fd84d-mnm84 1/1 Running 9 (23h ago) 37d

kube-system metrics-server-5985cbc9d7-96b42 1/1 Running 9 (23h ago) 37d

kube-system svclb-traefik-8ca69ac6-w26dv 2/2 Running 18 (23h ago) 37d

kube-system traefik-5d45fc8cc9-54q2b 1/1 Running 9 (23h ago) 37d

I would like to see logs from the Pod “svclb-traefik-8ca69ac6-w26dv”. This Pod has two containers running inside it. To find the container names, I can use kubectl describe or kubectl get:

❯ kubectl -n kube-system describe pod svclb-traefik-8ca69ac6-w26dv

...

Containers:

lb-tcp-80:

Container ID: docker://429613e67f0557c16c581c4b57fd340d8895152d9375536c115e3cfa87fff89c

Image: rancher/klipper-lb:v0.4.9

...

lb-tcp-443:

Container ID: docker://7296d81188f743355d05b2538c8f88e894d17a94bb2aafa6ce3fae563cb65c8b

Image: rancher/klipper-lb:v0.4.9

Image ID: docker://sha256:11a5d8a9f31aad9a53ff4d20939a81ccd42fa1a47f4e52aac9ae34731d9553ee

Port: 443/TCP

Host Port: 443/TCP

State: Running

...

To see logs from the container lb-tcp-80:

❯ kubectl -n kube-system logs svclb-traefik-8ca69ac6-w26dv lb-tcp-80

To see logs from the container lb-tcp-443:

❯ kubectl -n kube-system logs svclb-traefik-8ca69ac6-w26dv lb-tcp-443

If you want to follow the logs as they are written, add the -f option:

❯ kubectl -n kube-system logs -f svclb-traefik-8ca69ac6-w26dv lb-tcp-80
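On a busy container the full log can be very long, so it often helps to narrow it down. Here is a small sketch using three standard kubectl logs flags: --tail to limit the number of lines, --since to limit the time window, and --all-containers to read every container of the Pod in one go. The function names and the default values (20 lines, 10m) are my own choices.

```shell
# Show only the last N lines of one container's log (default: 20 lines).
recent_logs() {
  kubectl -n "$1" logs "$2" -c "$3" --tail="${4:-20}"
}

# Show what all containers of a Pod logged in the last period (default: 10m).
logs_since() {
  kubectl -n "$1" logs "$2" --all-containers --since="${3:-10m}"
}
```

For the Pod above, that would be recent_logs kube-system svclb-traefik-8ca69ac6-w26dv lb-tcp-80 or logs_since kube-system svclb-traefik-8ca69ac6-w26dv 1h.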

Summary

This section covered getting logs from a Pod with the kubectl logs command, picking a specific container inside a multi-container Pod, and following logs with the -f option.

The two kubectl commands from this post, describe and logs, are helpful for troubleshooting and debugging issues in Kubernetes clusters. They give you quick access to the status, events, and logs of containers running inside Pods, which can help you identify and resolve problems more efficiently.